Since I don’t expect to have time in the near future to work on V-HACD, I am writing this post to keep track of the performance improvements obtained with the latest optimizations I added. The source code is available here.

Experimental Evaluation

Table 1 compares the computation times (cf. Section "Machine description") of V-HACD 2.0 and V-HACD 2.2 obtained by using the configuration described below (cf. Table 2). These results show that V-HACD 2.2 is an order of magnitude faster than V-HACD 2.0. The gains are mainly obtained thanks to the convex-hull approximation (cf. Section "Updates"). The OpenCL acceleration provides 30-50% lower computation times when compared to the CPU-only version of V-HACD 2.2.

The code is still not fully optimized, so further improvements can be expected!

V-HACD 2.0 (CPU only) | V-HACD 2.2 (CPU+GPU) | |

army_man | 650s | 65s |

block | 220s | 26s |

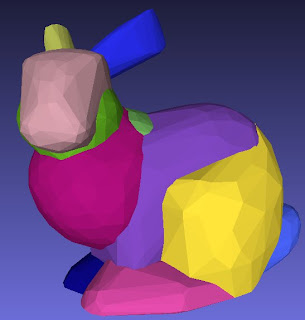

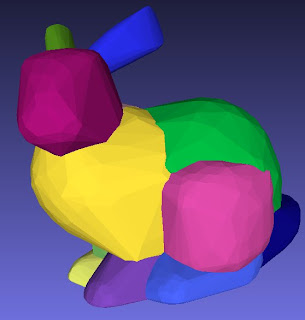

Bunny | 317s | 30s |

Camel | 388s | 34s |

Casting | 744s | 79s |

Chair | 408s | 42s |

Cow1 | 314s | 30s |

Cow2 | 349s | 32s |

deer_bound | 411s | 34s |
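To make the "order of magnitude" claim concrete, the per-model speedup can be computed directly from the timings in Table 1. The following is only a minimal sketch: the dictionary simply copies the values reported above, and the names `timings` and `speedups` are illustrative and not part of V-HACD.

```python
# Timings in seconds copied from Table 1:
# (V-HACD 2.0 CPU only, V-HACD 2.2 CPU+GPU).
timings = {
    "army_man": (650, 65),
    "block": (220, 26),
    "Bunny": (317, 30),
    "Camel": (388, 34),
    "Casting": (744, 79),
    "Chair": (408, 42),
    "Cow1": (314, 30),
    "Cow2": (349, 32),
    "deer_bound": (411, 34),
}


def speedups(table):
    """Return {model: old_time / new_time} for each row of the table."""
    return {name: old / new for name, (old, new) in table.items()}


ratios = speedups(timings)

# Print the models from the smallest to the largest speedup.
for name, ratio in sorted(ratios.items(), key=lambda item: item[1]):
    print(f"{name:12s} {ratio:5.1f}x")
```

With these numbers the speedup ranges from about 8.5x (block) to about 12.1x (deer_bound), which is consistent with the order-of-magnitude figure quoted above.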

Table 2: Configuration used for the experiments.

| Parameter | Config. 1 |
|---|---|
| resolution | 8000000 |
| max. depth | 20 |
| max. concavity | 0.001 |
| plane down-sampling | 4 |
| convex-hull down-sampling | 4 |
| alpha | 0.05 |
| beta | 0.05 |
| gamma | 0.0005 |
| delta | 0.05 |
| pca | 0 |
| mode | 0 |
| max. vertices per convex-hull | 64 |
| min. volume to add vertices to convex-hulls | 0.0001 |
| convex-hull approximation | 1 |

Updates

V-HACD 2.2 includes the following updates:

- OpenCL acceleration to compute the clipped volumes on the GPU

- Convex-hull approximation to accelerate concavity calculations

- Added local concavity measure to clipping cost calculation

- Changed command line parameters

To do

When I find time (probably not soon), I need to do the following:

- Test the code and build executables for other platforms (e.g., Linux, Mac OS), and

- Update the Blender add-on to make it work with the new command line parameters.

Machine description

- OS: Windows 8.1 Pro 64-bit

- CPU: Intel(R) Core(TM) i7-2600 CPU @ 3.40GHz (8 CPUs), ~3.4GHz

- GPU: NVIDIA GeForce GTX 550 Ti

- Memory: 10240MB RAM